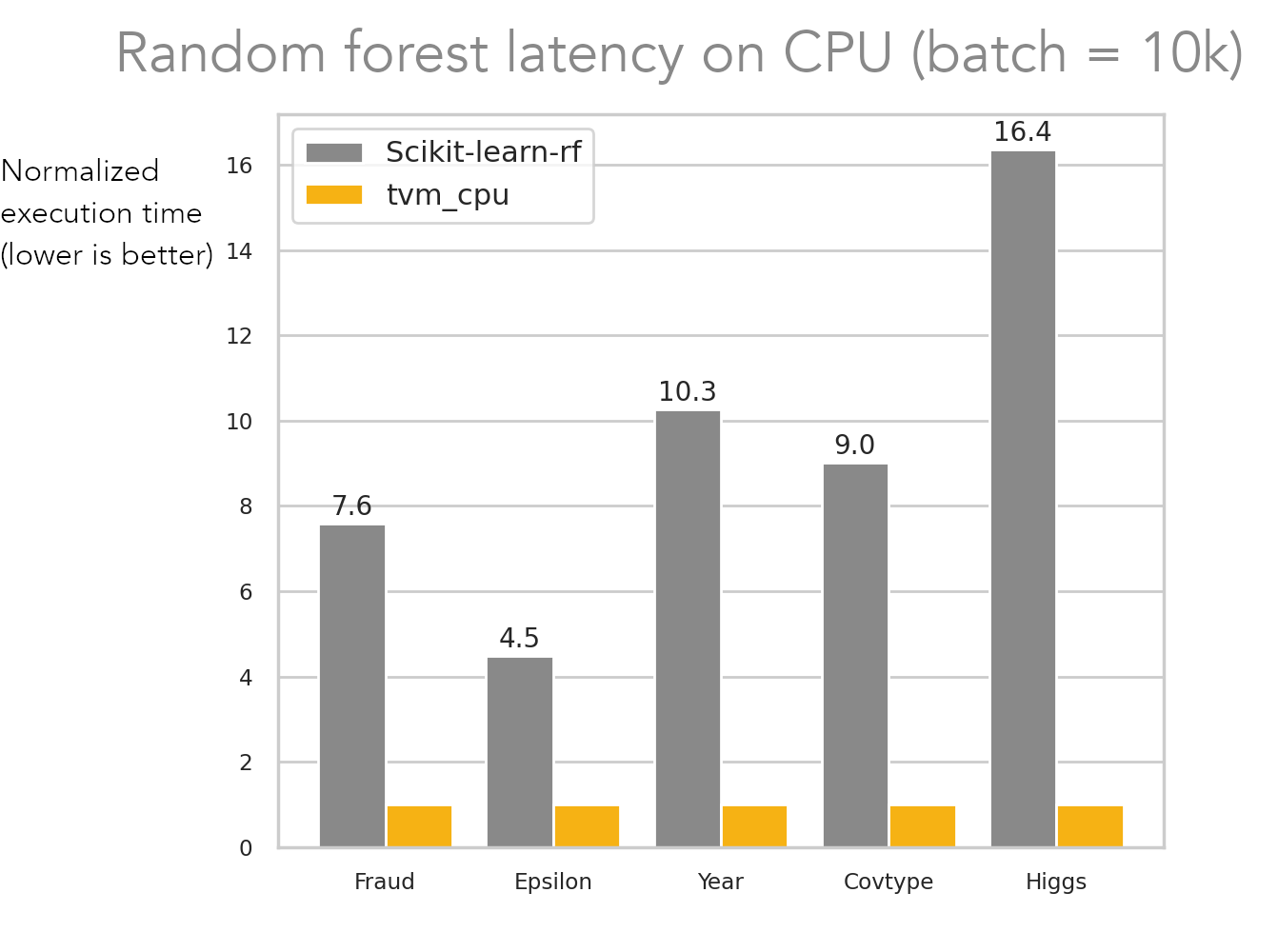

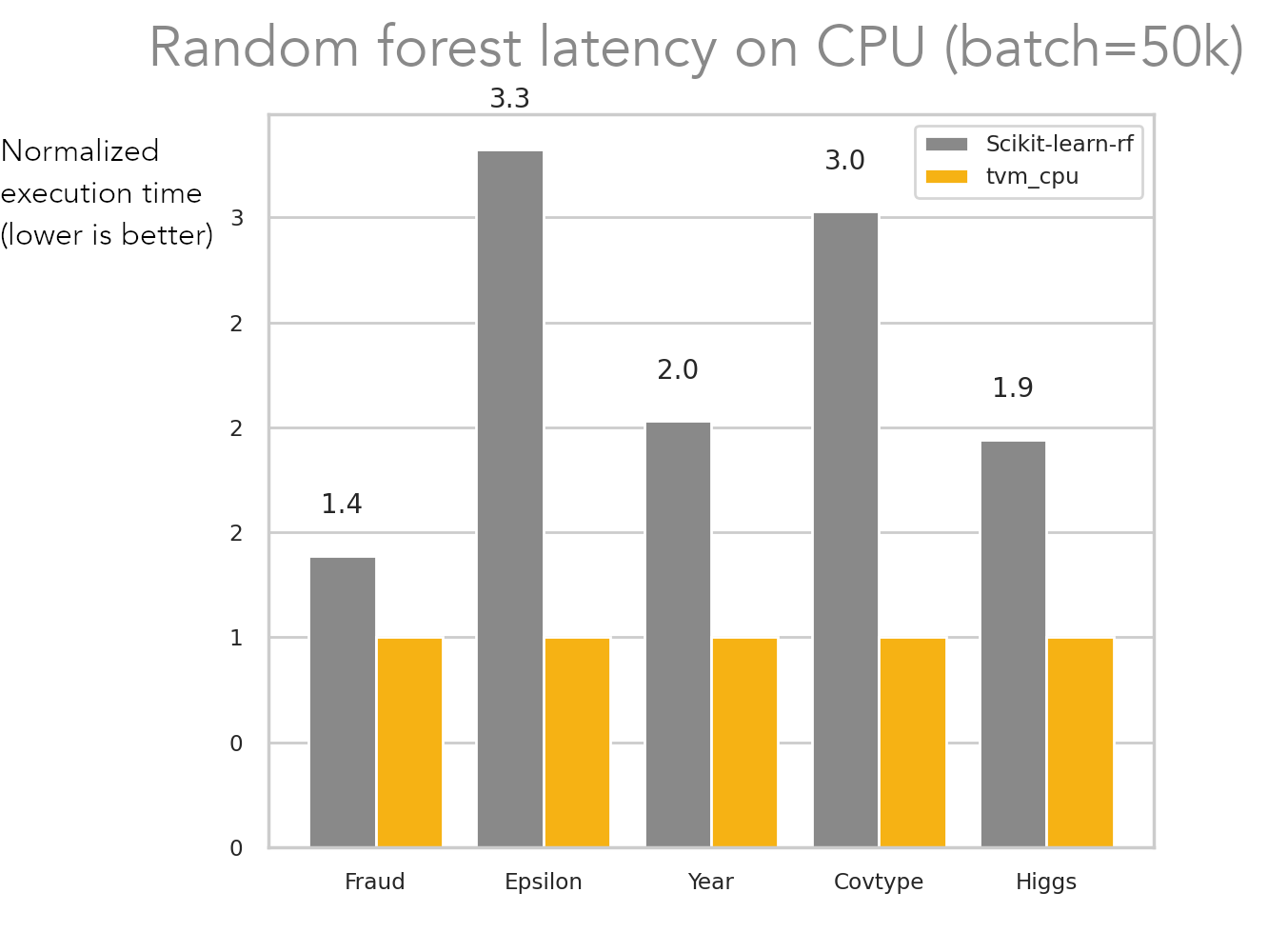

Speedup relative to scikit-learn over varying numbers of trees when...

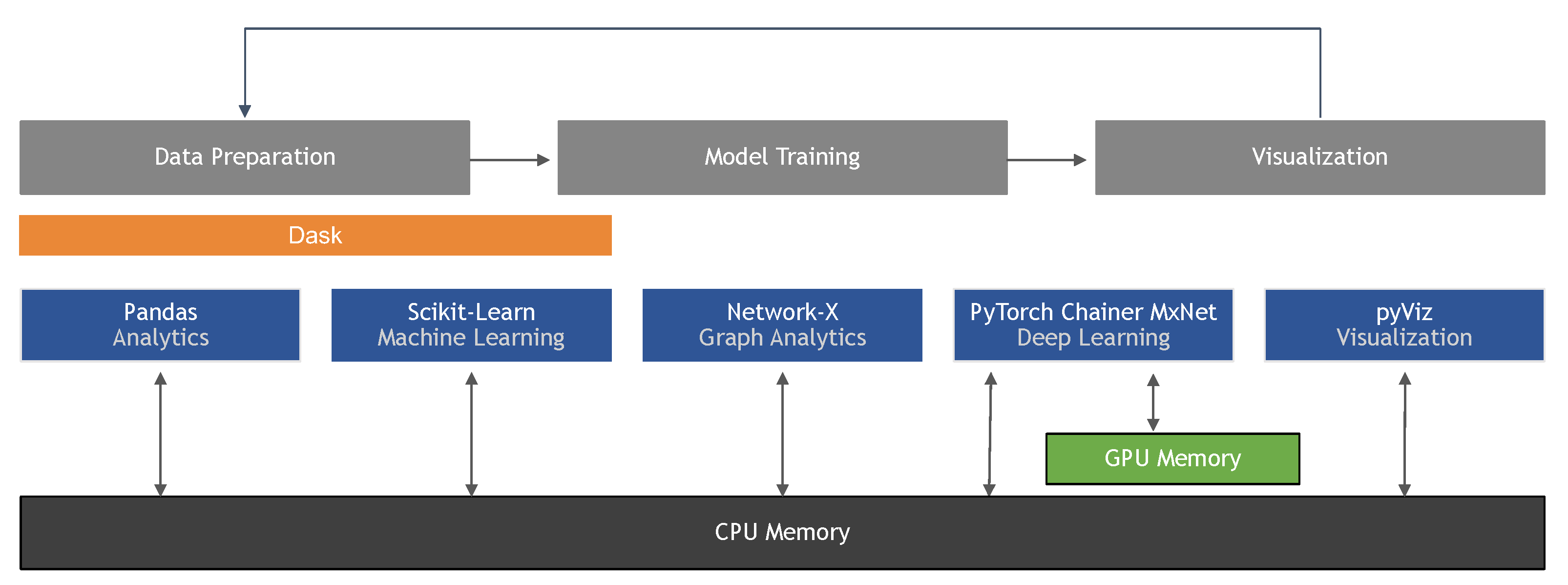

Information: Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence

Speedup relative to scikit-learn on varying numbers of features on a...

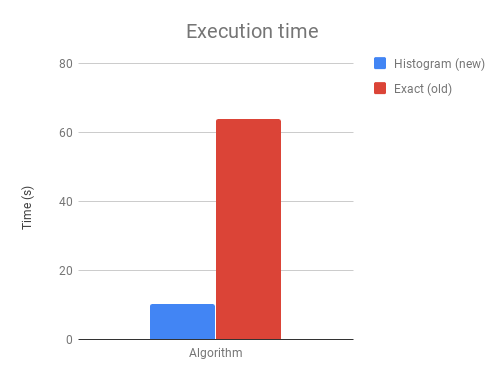

Python, Performance, and GPUs. A status update for using GPU… (Matthew Rocklin, Towards Data Science)